Adversarial Attacks in AIOps

Research on security vulnerabilities in AI-driven operations systems and defense mechanisms against adversarial attacks.

Project Overview

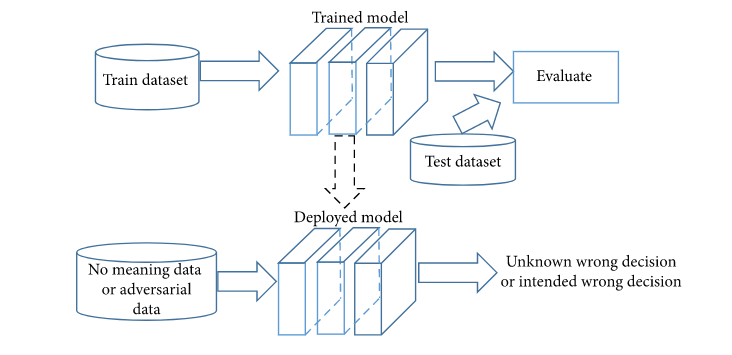

This research project investigates security vulnerabilities in AI for IT Operations (AIOps) systems, focusing specifically on adversarial attacks that can compromise system reliability and performance.

Research Objectives

- Identify key vulnerability patterns in AIOps systems

- Develop adversarial examples that can exploit these vulnerabilities

- Create defensive mechanisms to detect and mitigate attack vectors

- Establish best practices for securing AI-driven operations

Key Findings

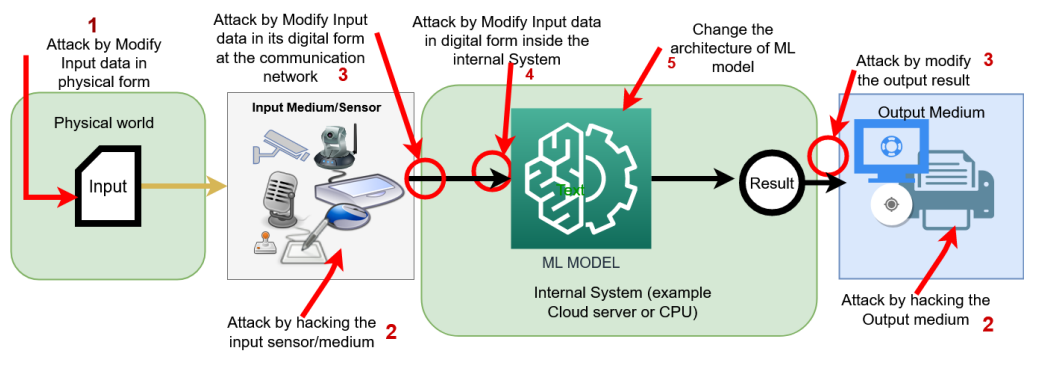

Generalized adversarial attack framework for AIOps systems

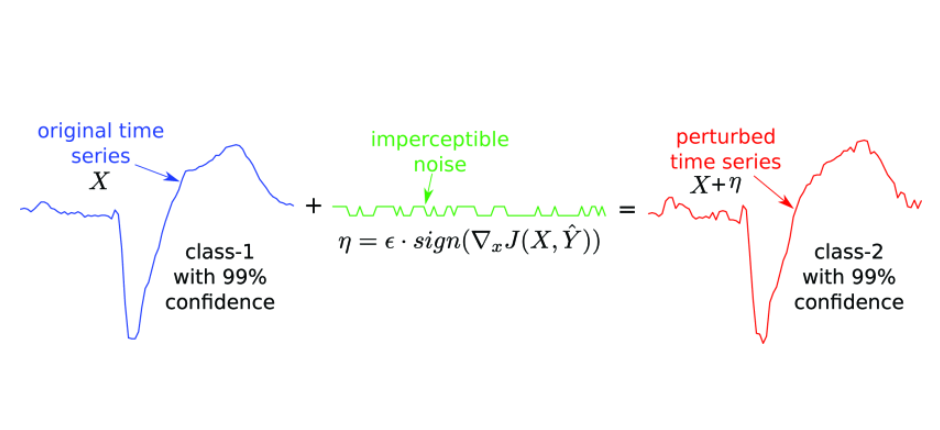

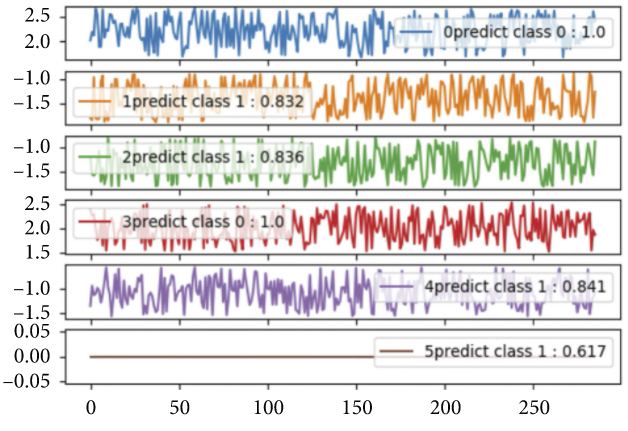

Time series misclassification attack demonstration

Common Vulnerability Patterns

Through systematic testing, we identified several vulnerability patterns in AIOps systems:

- Feature Space Manipulation: Slight modifications to system metrics that cause misclassification

- Time Series Poisoning: Gradual injection of adversarial patterns that evade anomaly detection

- Transfer Attacks: Crafting adversarial examples against one model (for example, a surrogate the attacker controls) and reusing them to fool another

- Decision Boundary Exploitation: Finding and targeting classification thresholds
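To make the time-series poisoning pattern concrete, the sketch below compares an abrupt shift with a gradual ramp of the same size against a rolling z-score detector. The detector, its parameters (`window`, `threshold`, `min_sigma`) and the synthetic periodic metric are hypothetical stand-ins, not the detectors tested in this project; the point is only that a detector whose baseline adapts to recent history can be walked away from slowly.

```python
import numpy as np


def rolling_zscore_alerts(series, window=20, threshold=3.0, min_sigma=1.0):
    """Return indices whose z-score against the trailing window exceeds threshold.

    Hypothetical stand-in for an AIOps anomaly detector. min_sigma floors the
    rolling standard deviation so a quiet window does not cause false alarms.
    """
    alerts = []
    for t in range(window, len(series)):
        history = series[t - window:t]
        sigma = max(history.std(), min_sigma)
        if abs(series[t] - history.mean()) / sigma > threshold:
            alerts.append(t)
    return alerts


t = np.arange(200)
baseline = 50.0 + np.sin(2 * np.pi * t / 10)  # synthetic periodic metric

# Abrupt manipulation: shift the metric by 15 units at t = 100.
abrupt = baseline.copy()
abrupt[100:] += 15.0

# Gradual poisoning: the same 15-unit shift, ramped in over 100 steps.
gradual = baseline.copy()
gradual[100:] += np.linspace(0.0, 15.0, 100)

print("baseline alerts:", len(rolling_zscore_alerts(baseline)))  # 0
print("abrupt alerts:  ", len(rolling_zscore_alerts(abrupt)))
print("gradual alerts: ", len(rolling_zscore_alerts(gradual)))   # 0
```

With these settings the abrupt shift is flagged as soon as it lands, while the ramp never moves more than about 1.6 units from its own trailing mean, so it stays under the threshold even though both series end at the same level.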

Defense Mechanisms Developed

Our research led to several defensive approaches that significantly improved robustness:

- Adversarial Training: Incorporating adversarial examples into training data

- Ensemble Methods: Using multiple models with different architectures to increase robustness

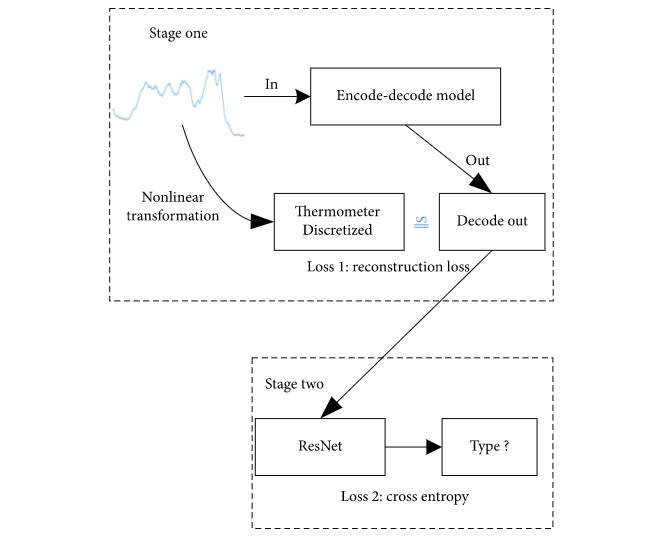

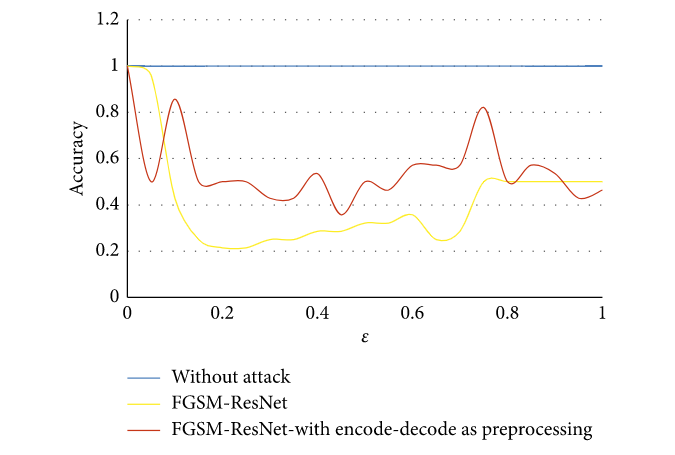

- Input Preprocessing: Applying transformations that preserve legitimate patterns but disrupt adversarial ones

- Runtime Monitoring: Implementing secondary detection systems to identify potential attacks
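Input preprocessing can be illustrated with a running median, which suppresses short, isolated spikes while keeping sustained level shifts. This is a minimal numpy sketch under assumed conditions: `median_smooth`, `threshold_detector`, the 80-unit limit and the spike pattern are all hypothetical, and the project's actual preprocessing transforms are not specified here.

```python
import numpy as np


def median_smooth(x, k=5):
    """Centred running median with edge padding (k must be odd)."""
    pad = k // 2
    padded = np.pad(x, pad, mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, k)
    return np.median(windows, axis=1)


def threshold_detector(x, limit=80.0):
    """Hypothetical detector: raise an alarm if any sample exceeds `limit`."""
    return bool(np.max(x) > limit)


normal = np.full(60, 50.0)

# Adversarial input: two isolated spikes crafted to cross the threshold.
spiked = normal.copy()
spiked[[20, 40]] = 95.0

# Legitimate incident: a sustained level shift lasting 15 samples.
incident = normal.copy()
incident[30:45] = 90.0

for name, x in [("spiked", spiked), ("incident", incident)]:
    # Columns: raw detector decision, decision after preprocessing.
    print(name, threshold_detector(x), threshold_detector(median_smooth(x)))
```

The trade-off is that the same filter would also hide a genuine single-sample spike, so the window size `k` has to be chosen against the shortest incident the system must still detect.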

Attack Demonstrations

Random noise attack methodology and implementation

Attack success rates and performance impact analysis
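A random noise attack can be contrasted with a gradient-directed one on a toy model. The sketch assumes a hypothetical linear anomaly classifier with random weights and measures success rate as the fraction of predictions that change under a perturbation bounded by `eps` per feature (an L∞ budget); it is not the project's implementation.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical linear anomaly classifier: "anomalous" when w.x + b > 0.
n_features = 8
w = rng.normal(size=n_features)
b = 0.0


def predict(X):
    return X @ w + b > 0


def random_noise_attack(X, eps):
    """Add uniform noise in [-eps, eps] to every feature."""
    return X + rng.uniform(-eps, eps, size=X.shape)


def gradient_sign_attack(X, eps):
    """FGSM-style step for a linear model: move each feature by eps in the
    direction that pushes the score towards the opposite class."""
    direction = np.where(predict(X), -1.0, 1.0)[:, None]
    return X + eps * direction * np.sign(w)


def success_rate(X, X_adv):
    """Fraction of inputs whose prediction changed."""
    return float(np.mean(predict(X) != predict(X_adv)))


X = rng.normal(size=(1000, n_features))
for eps in (0.1, 0.3, 0.5):
    print(eps,
          success_rate(X, random_noise_attack(X, eps)),
          success_rate(X, gradient_sign_attack(X, eps)))
```

For a linear model the gradient-sign step is the worst-case perturbation within that budget, so it flips at least as many predictions as any random draw of the same size; random noise alone therefore gives an optimistic picture of robustness.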

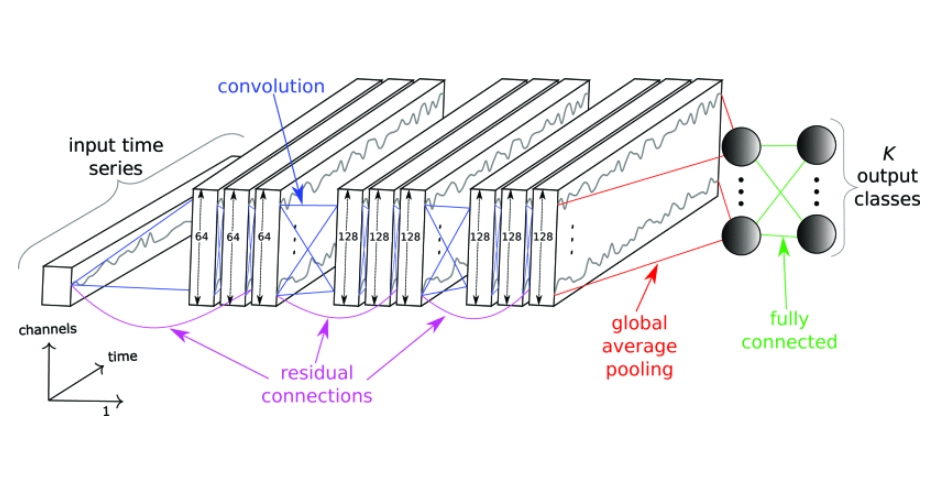

Encoder-decoder architecture for sophisticated attack generation

Comparative results showing encoder-decoder attack effectiveness

Implementation

Technologies Used

- Python for adversarial example generation and testing

- TensorFlow and PyTorch for model implementation

- Prometheus and Grafana for metrics collection and visualization

- Custom attack simulation framework for systematic vulnerability testing

Evaluation Methodology

We evaluated defense mechanisms using:

- Success rate of adversarial attacks before and after implementing defenses

- False positive/negative rates for anomaly detection

- Performance overhead of defensive measures

- Recovery time after successful attacks
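The evaluation criteria above reduce to a few ratios. The helpers below are a hypothetical sketch of how they might be computed; the names, the confusion-matrix convention and the choice of a relative (rather than absolute) reduction are assumptions, and the example numbers are illustrative, not results from this project.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    """Anomaly-detection outcome counts (hypothetical convention)."""
    tp: int  # anomalies correctly flagged
    fp: int  # normal windows flagged as anomalous
    tn: int  # normal windows correctly passed
    fn: int  # anomalies missed

    @property
    def false_positive_rate(self) -> float:
        negatives = self.fp + self.tn
        return self.fp / negatives if negatives else 0.0

    @property
    def false_negative_rate(self) -> float:
        positives = self.fn + self.tp
        return self.fn / positives if positives else 0.0


def attack_success_rate(successful: int, attempted: int) -> float:
    """Fraction of attempted attacks that achieved their goal."""
    return successful / attempted if attempted else 0.0


def relative_reduction(before: float, after: float) -> float:
    """Relative drop in a rate: 0.40 -> 0.05 is an 87.5% reduction."""
    return (before - after) / before if before else 0.0


def overhead(defended_latency_ms: float, baseline_latency_ms: float) -> float:
    """Fractional slowdown introduced by a defense (0.1 means 10% slower)."""
    return defended_latency_ms / baseline_latency_ms - 1.0


def mean_recovery_time(incidents: list[tuple[float, float]]) -> float:
    """Mean of (recovered_at - attack_started_at) over (start, end) pairs."""
    if not incidents:
        return 0.0
    return sum(end - start for start, end in incidents) / len(incidents)


if __name__ == "__main__":
    # Illustrative numbers only, not project results.
    before = attack_success_rate(40, 100)
    after = attack_success_rate(5, 100)
    print(f"attack success reduced by {relative_reduction(before, after):.1%}")
    print(Confusion(tp=8, fp=2, tn=88, fn=2).false_positive_rate)
```

Reporting the relative reduction alongside both raw rates avoids ambiguity about whether a percentage refers to a relative or an absolute change.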

Results and Impact

Our research demonstrated that even sophisticated AIOps systems can be vulnerable to carefully crafted adversarial attacks. However, implementing the proposed defensive mechanisms reduced successful attack rates by over 85% while maintaining system performance.

Key Contributions

- Taxonomy of adversarial attack patterns specific to AIOps systems

- Open-source toolkit for vulnerability assessment

- Multi-layered defense strategy blueprint for operational AI systems

- Performance benchmarks for security-critical AIOps deployments

Conclusion

This research provides a foundation for securing AI-driven operations against adversarial attacks. Future work will focus on developing more sophisticated defense mechanisms, exploring real-time adaptation strategies, and extending our findings to other domains of operational AI.

For more details on this research or to collaborate on AI security projects, please reach out via the contact information provided.